Dynamic DNS for Docker using Containerbuddy and CloudFlare

Easier integration now available

Joyent introduced Triton Container Name Service (CNS) on 8 March 2016 along with an updated version of our modern application blueprint demonstrating how to run Node.js applications in Docker with Nginx and Couchbase.

Triton CNS eliminates the need to run the CloudFlare watcher container described here. See the CNS readme for details about how to use CloudFlare with CNS.

Containerbuddy is now ContainerPilot

Containerbuddy has been renamed ContainerPilot to better align with the autopilot pattern. ContainerPilot makes implementing autopilot pattern applications straightforward.

Mentions of Containerbuddy in this post should be read as references to ContainerPilot.

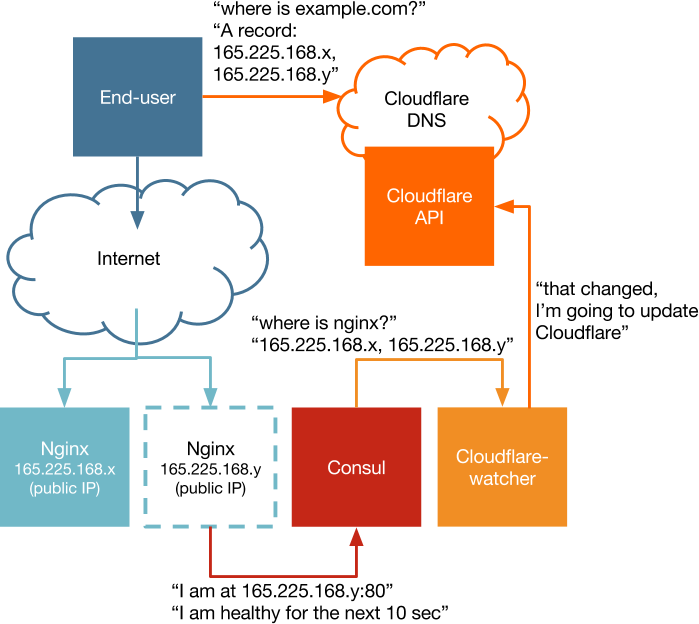

In a container-native project, we need to balance the desire for ephemeral infrastructure with the requirement to provide a predictable load-balanced interface with the outside world. By updating DNS records for a domain based on changes in the discovery service, we can make sure our users can reach the load-balancer for our project at all times.

As discussed in a previous post, we can use Containerbuddy to listen for changes to an application and make updates to a downstream service. This time, instead of making changes to our load balancing tier based on the changes in the application servers, we're going to push changes to DNS records via the CloudFlare API based on changes in the load balancing tier.

Updates via Containerbuddy

In our application, we'll be using Nginx as our stateless load-balancing tier. We could just as easily use HAProxy here, but for purposes of the example it's easier if we have a web server serving a static file. Containerbuddy in the Nginx containers will update Consul with the containers' public IP. We'll have a container named cloudflare that will use Containerbuddy to watch for changes in the Nginx service.

The cloudflare container will have a Containerbuddy onChange handler that updates CloudFlare via their API. The handler is just a bash script that queries the CloudFlare API for existing A records, and then diffs these against the IP addresses known to Consul. If there's a change, we add the new records first and then remove any stale records.
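The diff-then-apply order described above can be sketched in shell as follows. This is a minimal illustration, not the handler from the example repo: the function names `missing_from` and `plan_changes` are hypothetical, the IP lists are passed in as whitespace-separated strings, and the calls that would fetch A records from CloudFlare or addresses from Consul are left out.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative and not from the example repo.

# Print each IP in list $2 that does not appear in list $1.
# Both lists are whitespace-separated strings of IPv4 addresses.
missing_from() {
    for ip in $2; do
        # $(echo $1) collapses any newlines or repeated spaces to single spaces.
        case " $(echo $1) " in
            *" $ip "*) ;;                 # already present, nothing to print
            *) printf '%s\n' "$ip" ;;
        esac
    done
}

# Print the planned changes: additions first, then removals of stale records.
# $1 = IPs currently in A records, $2 = IPs currently known to Consul.
plan_changes() {
    for ip in $(missing_from "$1" "$2"); do
        echo "add $ip"
    done
    for ip in $(missing_from "$2" "$1"); do
        echo "remove $ip"
    done
}
```

Printing the additions before the removals mirrors the ordering in the text: at no point in the plan is the record set smaller than it needs to be.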

Running the example

You can find a demonstration of this architecture in the example directory of the GitHub repo. Running this example on your own requires a CloudFlare account and a domain for which you've delegated DNS authority to CloudFlare. If you just want to try it out without actually updating your DNS records, you can go through the whole process of getting CloudFlare in front of your site (on their free tier); so long as you don't update your nameservers with your registrar, there will be no actual changes to the DNS records seen by the rest of the world. Once you're ready:

- Get a Joyent account and add your SSH key.

- Install the Docker Toolbox (including `docker` and `docker-compose`) on your laptop or other environment, as well as the Joyent CloudAPI CLI tools (including the `smartdc` and `json` tools).

- Have your CloudFlare API key handy.

- Configure Docker and Docker Compose for use with Joyent:

      curl -O https://raw.githubusercontent.com/joyent/sdc-docker/master/tools/sdc-docker-setup.sh && chmod +x sdc-docker-setup.sh
      ./sdc-docker-setup.sh -k us-east-1.api.joyent.com ~/.ssh/

At this point you can run the example on Triton:

    cd ./examples
    make .env
    ./start.sh

or in your local Docker environment:

    cd ./examples
    make
    # at this point you'll be asked to fill in the values of the .env
    # file and make will exit, so we need to run it again
    make
    ./start.sh -f docker-compose-local.yml

The .env file that's created will need to be filled in with the values described below:

    CF_API_KEY=
    CF_AUTH_EMAIL=
    CF_ROOT_DOMAIN=
    SERVICE=nginx
    RECORD=
    TTL=600

The Consul UI will launch and you'll see the Nginx node appear. The script will also open your CloudFlare control panel at https://www.cloudflare.com/a/dns/example.com (using your own domain, of course), where you'll see the domain or subdomain you provided in the .env file.
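Before running the start script, it can help to confirm that every key in the .env file described above has a value. The check below is a small sketch under that assumption; `check_env` is a hypothetical helper and is not part of the example repo.

```shell
#!/bin/sh
# Hypothetical helper: report any required key that is absent or left blank.
# $1 = path to the env file. Returns 0 if all keys have values, 1 otherwise.
check_env() {
    env_file=$1
    missing=0
    for key in CF_API_KEY CF_AUTH_EMAIL CF_ROOT_DOMAIN SERVICE RECORD TTL; do
        # Require "KEY=" followed by at least one character.
        if ! grep -Eq "^${key}=.+" "$env_file"; then
            echo "missing value for ${key}" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_env .env && ./start.sh` would start the example only once all six values are present.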

Let's scale up the number of nginx nodes:

    docker-compose scale nginx=3

As the nodes launch and register themselves with Consul, you'll see them appear in the Consul UI. You'll also see the A records in your CloudFlare console update.

Although we remove stale records after we add new records, in a production environment we won't want to simply swap out one of our Nginx containers for another immediately. Because clients or recursive DNS resolvers will be respecting the TTL of our A record, we'll want to add a new container, wait for DNS propagation (you can do this by monitoring traffic flow), and only once the new container is receiving traffic remove the old container.
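As a rough lower bound on how long to keep the old container running, we can compute how much of the TTL is left after its A record was removed. This is only a sketch: `seconds_until_expired` is a hypothetical helper, it assumes resolvers honor the TTL, and it does not replace monitoring actual traffic flow as suggested above.

```shell
#!/bin/sh
# Hypothetical helper: seconds left until a removed A record should have
# expired from caches that respect the TTL.
# $1 = time the record was removed (epoch seconds)
# $2 = TTL of the record in seconds
# $3 = current time (epoch seconds)
seconds_until_expired() {
    removed_at=$1
    ttl=$2
    now=$3
    remaining=$(( removed_at + ttl - now ))
    # Never report a negative wait once the TTL has already passed.
    [ "$remaining" -gt 0 ] || remaining=0
    echo "$remaining"
}

# Example use (not run here): wait out the TTL before stopping the old container.
#   sleep "$(seconds_until_expired "$removed_at" 600 "$(date +%s)")"
```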

The problem is that if we simply remove the old container, we'll lose traffic between the time we remove the container and the time the TTL expires. We need a way to signal Containerbuddy to mark the node for planned maintenance. I'll circle back to that in a revision to Containerbuddy and discuss this change in an upcoming post.

If you've been following the request-for-discussion on the Triton Container Naming Service (TCNS), you'll know that Joyent is working on adding a feature to our platform that will simplify some of what we've discussed here. When that feature is available, we can simply add a CNAME in our external DNS provider that points to the TCNS-assigned name for the service. The CloudFlare approach in this example has the added advantage of connecting our containers to a global CDN for faster performance.

Next steps

So far in this series I've talked about the expectations of container-native applications, introduced Containerbuddy as a means of shimming existing applications, covered dynamic load balancing via Nginx, and now shown how to update DNS providers so we can have zero-downtime deploys. In upcoming posts I'll tie these components together into a production-ready multi-tier application.

Video overview

The following video offers a walkthrough of how to automate application discovery and configuration using Containerbuddy and demonstrates the process in the context of a complete, Dockerized application.

Post written by Tim Gross