Anti-patterns for service discovery in Docker

Most applications, certainly most Dockerized applications, are made up of services running in a number of containers. Connecting those containers to each other is one of the most difficult aspects of Dockerizing any application, in no small part because it forces us, the developers, to design for automated service discovery and configuration that we'd often been able to gloss over previously. How we do that and where it fits in our application stack are areas of rapid development and experimentation, but we've learned enough to identify some anti-patterns we must end and solutions we can build forward on.

Local proxies

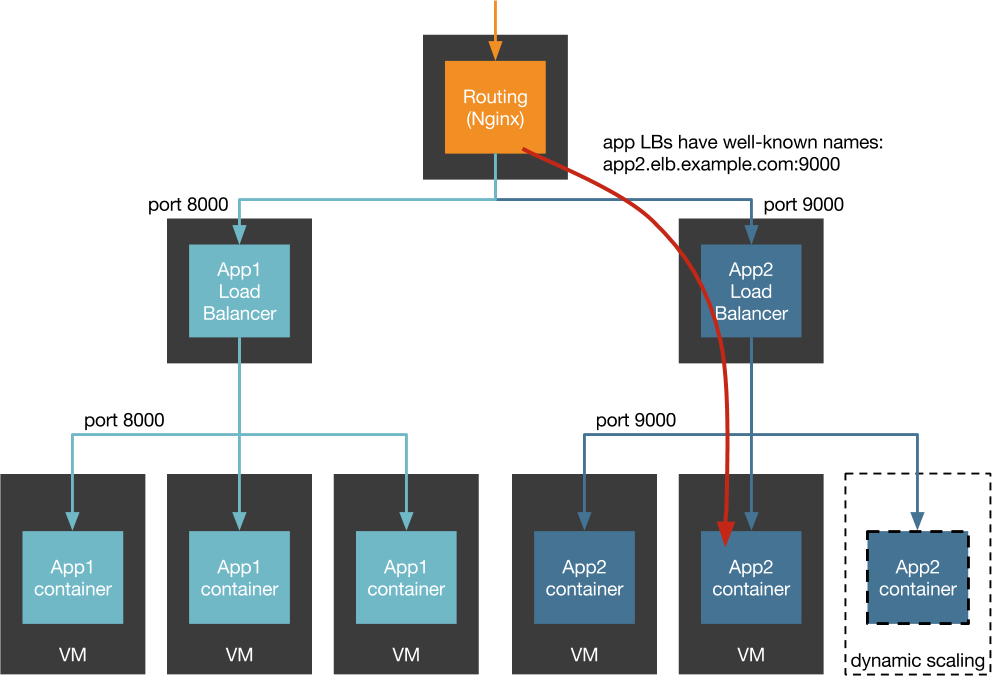

A common anti-pattern used for HTTP microservices is to have a load balancing service fronting each stateless microservice. This LB has a well-known name and port within the context of the application, for example app1.mycompany.example.com:8080. This allows for dynamic scaling of backend servers for an application; they need only register themselves with the load balancer to become available across the entire project. Downstream applications only need to know the DNS name of the load balancer, and if we need to reroute traffic away from the upstream application it's a matter of changing a DNS entry and waiting for it to propagate. The load balancer makes periodic health checks of the application instances and removes them if they become unhealthy.

There are several problems with this architecture.

Utilization: The hidden assumption of this architecture is that we have one container per VM. If we provision multiple containers per VM to improve utilization, then we need to configure the LB to listen for multiple ports and the question of how health checks are supposed to work becomes complicated. Do we remove the entire node because one of its services dies? Do we need an instance of every service on each VM or can we route some ports to only some instances? Do we even have that option if we're using a managed LB?

Networking Inefficiency: We've introduced an extra network hop for every single internal request. We can avoid the overhead of NAT on the VM if we use host networking (the --net=host option) for our Docker containers. Indeed, that's probably the only way to make a managed LB work, but it makes the utilization question even worse because we can't have multiple application container instances listening on the same port on the same VM. In any case, we still have an extra over-the-wire connection to make in order to serve every request.

SPoF: The load balancer becomes a single point of failure for the application, and if you're relying on a managed service for LB then the LB can fail in conditions that your application will not (e.g., AWS ELBs are built on Elastic Block Store, and so you can be affected by an EBS failure even if all your AWS EC2 instances are instance-store-backed VMs).

DNS: DNS provides an enormous amount of developer simplicity in terms of discovery -- just hard-code a CNAME in your environment variables and you're good to go. But DNS isn't suitable for discovery in applications where multiple hosts are behind a single record but where we need to be able to access individual hosts. So you can't use DNS for load balancing except for simple round-robin. Additionally, many applications have no way of reloading cached DNS entries to pick up changes; in some web frameworks, changing the DNS entry for a DB connection requires restarting the whole application as a result.
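The stale-entry problem can be illustrated with a small sketch. Everything here is hypothetical: `fake_resolve` and the `records` table stand in for a real DNS lookup, and the addresses are made up. The only point is that a client which resolves its upstream once at startup never notices when the record changes.

```python
from typing import Callable, Dict, List


class StartupResolvedClient:
    """Resolves its upstream once, at construction, as many frameworks do."""

    def __init__(self, name: str, resolve: Callable[[str], List[str]]):
        # The answer is cached for the life of the process.
        self.addresses = resolve(name)

    def upstream(self) -> str:
        return self.addresses[0]


# Hypothetical DNS table standing in for a real resolver.
records: Dict[str, List[str]] = {"db.example.com": ["10.0.0.5"]}


def fake_resolve(name: str) -> List[str]:
    return list(records[name])


client = StartupResolvedClient("db.example.com", fake_resolve)

# An operator repoints the record while the application is running.
records["db.example.com"] = ["10.0.0.9"]

stale = client.upstream()                  # what the running app still uses
fresh = fake_resolve("db.example.com")[0]  # what a new lookup would return
```

Until the process restarts (or re-resolves on its own), `stale` and `fresh` disagree, which is the restart-to-pick-up-changes behavior described above.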

Health checking: If an application instance is unable to respond to remote health checks, then the load balancer will remove that application instance from the pool. But if the application instance is unable to respond because it is under heavy load or is in a blocking state (e.g., Node.js performing computationally-heavy work or Rails unicorn workers waiting on long-running queries), then the load balancer may be removing instances just when the service needs more instances in the pool. Scaling up additional VM capacity takes on the order of minutes, and any new instances will be coming live in a state where there are still not enough nodes marked as healthy to serve queued requests. There is actually enough capacity available to run the service, but the LB is "helpfully" not leaving instances marked healthy long enough to take up the load. The service is dead in the water until a bleary-eyed operator temporarily turns off the health checks at 2AM.
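A deliberately crude model makes the spiral easier to see. It assumes load is spread evenly across the pool and that any instance pushed past a fixed capacity misses its health check and is removed; real load balancers and applications are messier, and the numbers are invented for illustration.

```python
def surviving_instances(instances: int, capacity: int, load: int) -> int:
    """Return how many instances remain once removals stop.

    Assumes an even spread of `load` (requests/s) and that an instance
    handling more than `capacity` requests/s fails its health check.
    """
    healthy = instances
    while healthy > 0 and load / healthy > capacity:
        # The LB drops an overloaded instance; the rest absorb its share.
        healthy -= 1
    return healthy


# 380 req/s over 4 instances is 95 each: everyone stays in the pool.
within_capacity = surviving_instances(instances=4, capacity=100, load=380)

# 420 req/s is 105 each: one removal pushes the others further over.
just_over = surviving_instances(instances=4, capacity=100, load=420)
```

In this model, being 5% over capacity does not degrade the service by 5%; it empties the pool.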

Layers: Like onions and ogres, this architecture has many layers (of orchestration). The life-cycle of the VMs in particular is a lot of extra work for little-to-no payoff in terms of business value.

The scheduler-backed variant

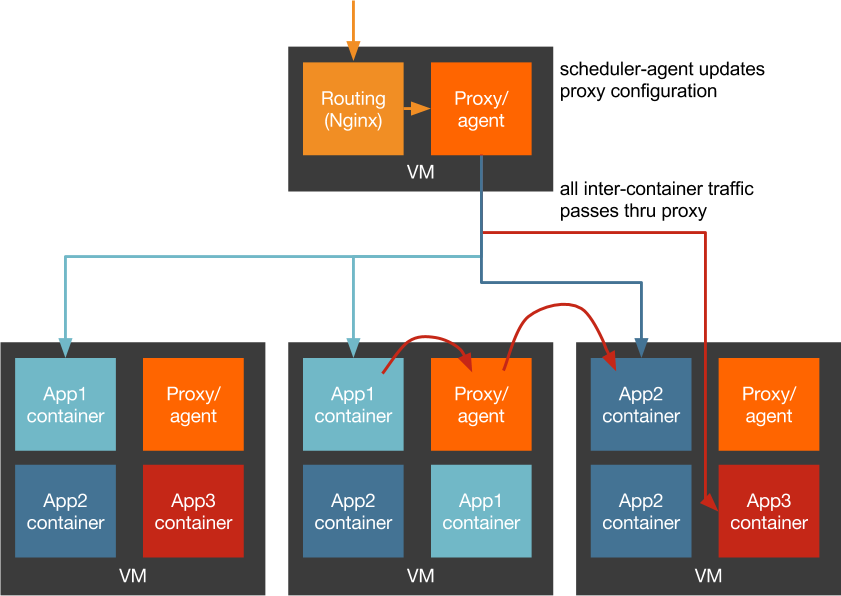

A common variant of the pattern described above breaks down the discrete load balancer into an on-host proxy and manages the proxy with the application scheduler. In this scenario, an agent on the host communicates with a discovery service and/or scheduler. The agent updates the configuration of a local proxy like HAProxy and then application containers only ever send connections to this proxy.
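As a rough sketch of what such an agent does, the function below renders an HAProxy backend stanza from a list of instances. The function name, service name, and addresses are hypothetical, and a real agent would also have to write the file and reload the proxy, which is not shown.

```python
from typing import Iterable, Tuple


def render_backend(service: str, instances: Iterable[Tuple[str, int]]) -> str:
    """Render one HAProxy `backend` section for the given instances."""
    lines = [f"backend {service}", "    balance roundrobin"]
    for i, (host, port) in enumerate(instances):
        # `check` asks HAProxy to health-check this server.
        lines.append(f"    server {service}-{i} {host}:{port} check")
    return "\n".join(lines) + "\n"


# Instances as the agent might have learned them from the scheduler.
config = render_backend("app1", [("10.0.0.11", 8080), ("10.0.0.12", 8080)])
```

Every change in the set of instances means regenerating this configuration and reloading the proxy on every host, which is part of the complexity discussed next.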

This somewhat improves the utilization problem at the cost of a lot of complexity. We still have to manage the separate life-cycles of VMs and application containers, and we've greatly expanded the number of containers we have to manage. We've solved the cascading failure caused by overloaded containers, but we still end up deploying health check code for each application to the agent container.

We also haven't entirely solved the networking problem. We've rid ourselves of one hop over the LAN, but containers don't have their own IP. So we have a local proxy we have to pass through for outbound requests and all packets are being NAT'd through the Docker bridge interface. This may be an improvement over the first design but we can do better!

The container-native alternative

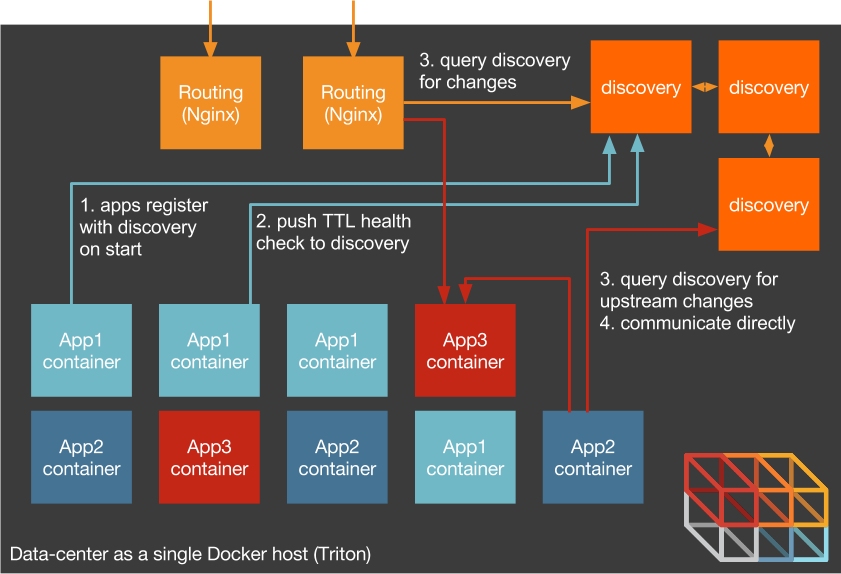

In a container-native environment, we can remove the middleman if we require applications to talk to a source of truth to discover their upstream services and tell their downstream services where to find them. This pushes the responsibility for the application topology away from the network infrastructure and into the application itself where it belongs -- only the application can have the full awareness of its state necessary to manage itself.

Importantly, without the notion of host-local services we can free ourselves of the VM. Application containers can be physically located wherever our placement engine decides, and this placement is entirely separate from the scheduling of work. That is, our application can now be in charge of scheduling and the infrastructure can be in charge of resource allocation.

We will still need a registry of services where application containers can report in and discover their upstreams. In a microservices environment, the best place for this registry is in a highly-available clustered data store like Consul, ZooKeeper, or etcd. (If we have a clustered database like Couchbase or Elasticsearch, nodes can usually discover each other on their own, but that doesn't help the downstream services that need to consume the DB's data.)

There's no doubt that this architecture introduces some additional responsibilities to the application. An application container should:

- At startup, register itself as a member of a given service with the discovery service.

- Introspect itself to determine whether it's healthy.

- Send periodic heartbeat messages to the discovery service so that the discovery service knows whether it is healthy.

- Check in periodically with the discovery service to see if changes have been made to upstream services.

- Cause the application to reload its configuration or otherwise respond properly to changes in the upstream services.
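The responsibilities in this list can be sketched in a few lines. This is a minimal illustration, not a real client: `Registry` is an in-memory stand-in for a discovery service such as Consul or etcd, registration and heartbeat are collapsed into a single TTL-refreshing write, and all class names, service names, and addresses are hypothetical.

```python
import time
from typing import Callable, Dict, List


class Registry:
    """In-memory stand-in for a discovery service with TTL-based health."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._last_seen: Dict[str, Dict[str, float]] = {}

    def heartbeat(self, service: str, address: str) -> None:
        # The first heartbeat doubles as registration.
        self._last_seen.setdefault(service, {})[address] = self.clock()

    def healthy(self, service: str) -> List[str]:
        now = self.clock()
        members = self._last_seen.get(service, {})
        return sorted(a for a, seen in members.items() if now - seen <= self.ttl)


class App:
    def __init__(self, registry: Registry, service: str, address: str,
                 upstream: str, is_healthy: Callable[[], bool]):
        self.registry, self.service, self.address = registry, service, address
        self.upstream, self.is_healthy = upstream, is_healthy
        self.upstreams: List[str] = []
        self.reloads = 0

    def tick(self) -> None:
        """One pass of the loop the application would run periodically."""
        if self.is_healthy():  # introspect, then report in
            self.registry.heartbeat(self.service, self.address)
        current = self.registry.healthy(self.upstream)  # check in for changes
        if current != self.upstreams:
            self.upstreams = current
            self.reloads += 1  # stand-in for reloading configuration


now = [0.0]  # fake clock so the example does not need to sleep
registry = Registry(ttl=10, clock=lambda: now[0])
db = App(registry, "db", "10.0.0.5:5432", upstream="none", is_healthy=lambda: True)
web = App(registry, "web", "10.0.0.7:8080", upstream="db", is_healthy=lambda: True)

db.tick()
web.tick()
upstreams_at_start = list(web.upstreams)

now[0] = 11.0  # the db stops heartbeating and its TTL expires
web.tick()
upstreams_after_expiry = list(web.upstreams)
```

An instance that stops reporting simply ages out of the registry, and consumers pick up the change on their next pass without any load balancer or proxy in between.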

Some might want to delegate this responsibility to a "sidecar" container. If we move these functions into the sidecar then, the thinking goes, we don't have to change our application at all. But either the sidecar container needs the ability to reach into the application container, or the application container needs to expose its management interface outside the isolation of the container. Neither option is suited for multi-tenant security.

Making the application container responsible for determining and reporting its own health is an important change from our anti-pattern architecture described above. This allows for application-aware health checks and even health checks that take into account the cluster health. If we wanted to push this function into the discovery service (or again, a sidecar container), then the discovery service would need to know how to perform these checks for every application. This means deploying application-specific code to the discovery service and intertwining their deployment cycles.

Additionally, although an external check mostly works when the application is a simple HTTP service, the health of a non-HTTP application like a database may not be something a 200 OK can answer. More importantly, self-reported health can prevent the runaway booting-from-the-LB effect: instances that are merely slow to respond won't be removed just when the added capacity is needed.
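Here is one way an application-aware check might look for a database replica. The `ReplicaState` fields and the 30-second lag threshold are assumptions chosen for illustration; a real application would define health in whatever terms make sense for it.

```python
from dataclasses import dataclass


@dataclass
class ReplicaState:
    accepting_connections: bool
    replication_lag_seconds: float
    queued_requests: int


def is_healthy(state: ReplicaState, max_lag_seconds: float = 30.0) -> bool:
    """A busy replica is still healthy; an unreachable or stale one is not."""
    if not state.accepting_connections:
        return False
    # queued_requests is deliberately ignored: being slow is not being dead.
    return state.replication_lag_seconds <= max_lag_seconds


busy_but_current = is_healthy(ReplicaState(True, 2.0, queued_requests=5000))
idle_but_stale = is_healthy(ReplicaState(True, 120.0, queued_requests=0))
```

The heavily loaded replica keeps reporting healthy and stays in rotation, while the idle replica serving stale data takes itself out, which is the opposite of what a timeout-based external check would decide.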

When the application has responsibility for understanding the topology, it can respond more quickly to changes. We can potentially even push cluster state changes to an application instance rather than polling the discovery service, allowing application containers to act far more quickly than they would if waiting for a DNS TTL.
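The difference between polling and pushing can be sketched with a registry that notifies watchers as soon as membership changes. `WatchableRegistry` is a hypothetical in-memory stand-in, not the API of any particular discovery service.

```python
from typing import Callable, Dict, List

Callback = Callable[[List[str]], None]


class WatchableRegistry:
    """In-memory stand-in for a discovery service that supports watches."""

    def __init__(self) -> None:
        self._members: Dict[str, List[str]] = {}
        self._watchers: Dict[str, List[Callback]] = {}

    def watch(self, service: str, callback: Callback) -> None:
        self._watchers.setdefault(service, []).append(callback)

    def set_members(self, service: str, addresses: List[str]) -> None:
        self._members[service] = list(addresses)
        for callback in self._watchers.get(service, []):
            # Pushed immediately; no polling interval or TTL to wait out.
            callback(list(addresses))


seen: List[List[str]] = []
registry = WatchableRegistry()
registry.watch("db", seen.append)  # the app's reload hook would go here

registry.set_members("db", ["10.0.0.5:5432"])
registry.set_members("db", ["10.0.0.5:5432", "10.0.0.6:5432"])
```

Each membership change reaches the watcher in the same call that made it, so the application's reaction time is bounded by its own reload logic rather than by a cache expiry.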

In an upcoming series of posts, I'll be talking more about this architecture, how to make it work with existing applications, and demonstrating some examples.

Updated material

The anti-patterns described here remain a real problem to watch out for, but ContainerPilot simplifies the process of active service discovery.

The ContainerPilot overview video demonstrates how to use it to automate application discovery and configuration for autopilot operations. The ContainerPilot 2.0 example implementation offers detailed instruction on building autopilot pattern applications.

Post written by Tim Gross