Secrets management in the Autopilot Pattern

No matter how we deploy our applications, managing secrets invokes the chicken-and-the-egg problem. Any system for secrets management has to answer the question of how the application authenticates to the system managing the secrets in the first place. Applications built with the Autopilot Pattern can be easily made to leverage secrets management services, but it's important to understand the security model and threat model of these services.

Traditionally, secrets have been managed by expensive and non-portable solutions (such as hardware security modules, HSMs), or by encrypted blobs in configuration management that require manual intervention (such as Chef encrypted data bags). HashiCorp's Vault provides an alternative that is portable to any infrastructure. Vault provides an API for storing and accessing secrets that is well-suited for autonomous applications like those using the Autopilot Pattern.

Vault provides encryption at rest for secrets, encrypted communication of those secrets to clients, and role-based access control and auditability for secrets. It does so while allowing for a high-availability configuration with a straightforward single-binary deployment. See the Vault documentation for details on its security model and threat model.

Chicken-and-the-Egg

Vault uses Shamir's Secret Sharing to control access to the "first secret" that we use as the root of all other secrets. A master key is generated automatically and broken into multiple shards. A configurable threshold of k shards is required to unseal a Vault with n shards in total.

When Vault starts, it is in a "sealed" state. Much like the missile console from your favorite action movie involving submarines, multiple operators must "turn their key" to unseal the Vault. The operator(s) need to use the threshold number (k) of the n key shares, and of course this action is logged for later auditing. This model prevents a rogue member of the team from unsealing a Vault and creating new root keys.

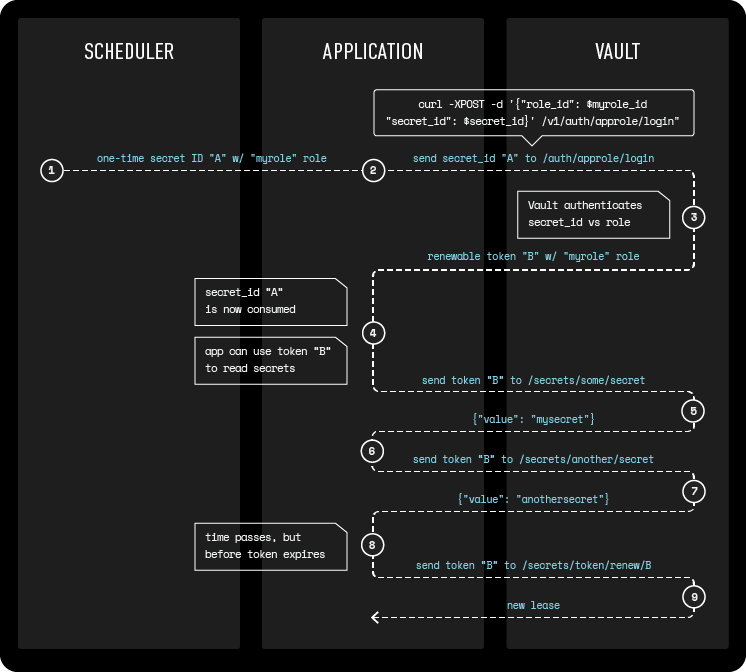
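To make the k-of-n threshold concrete, here's a toy sketch of 2-of-3 sharing in plain shell arithmetic. This is not Vault's implementation (Vault splits the key byte-by-byte over a finite field and uses a secure random source); the small prime modulus, the fixed coefficient, and all of the function names are assumptions chosen to keep the example readable.

```shell
#!/bin/sh
# Toy 2-of-3 secret sharing over the integers mod a small prime.
# Illustration only; never use this to protect a real secret.

P=257   # small prime modulus (assumption, chosen for readability)

# modpow BASE EXP: prints BASE^EXP mod P using square-and-multiply
modpow() {
    mp_base=$(( $1 % P )); mp_exp=$2; mp_result=1
    while [ "$mp_exp" -gt 0 ]; do
        if [ $(( mp_exp % 2 )) -eq 1 ]; then
            mp_result=$(( mp_result * mp_base % P ))
        fi
        mp_base=$(( mp_base * mp_base % P ))
        mp_exp=$(( mp_exp / 2 ))
    done
    echo "$mp_result"
}

# modinv A: prints the inverse of A mod P (Fermat: A^(P-2) mod P)
modinv() {
    modpow $(( ($1 % P + P) % P )) $(( P - 2 ))
}

# make_share SECRET COEFF X: evaluates f(X) = SECRET + COEFF*X mod P.
# With a degree-1 polynomial, any 2 points determine f(0), the secret.
make_share() {
    echo $(( ($1 + $2 * $3) % P ))
}

# combine X1 Y1 X2 Y2: recovers f(0) from two shares,
# using f(0) = (Y1*X2 - Y2*X1) / (X2 - X1) mod P
combine() {
    x1=$1; y1=$2; x2=$3; y2=$4
    inv=$(modinv $(( x2 - x1 )))
    num=$(( ((y1 * x2 - y2 * x1) % P + P) % P ))
    echo $(( num * inv % P ))
}

# Split the secret 42 into three shares, then recover it from shares 1 and 3.
s1=$(make_share 42 7 1)
s2=$(make_share 42 7 2)
s3=$(make_share 42 7 3)
combine 1 "$s1" 3 "$s3"   # prints 42
```

Any two of the three shares give the same answer, while a single share is consistent with every possible secret. That property is what lets the team require multiple operators to unseal.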

Once the Vault is unsealed, the root token generated during initialization is used to set up role-based access control to secrets. This process matches ACL policies with secrets (keys and their values) and authentication backends like tokens, certificates, or application roles. Vault allows for a rich set of workflow options, but for our containerized applications we'll use the AppRole backend. When a container is launched we'll provide it with a one-time-use password (called a "secret ID"). The application uses this secret ID to log into Vault and receive a Vault token. This token is similar to a session cookie that we might use in a web application -- it gives the client access to resources in Vault (secrets) without having to send the secret ID "password" with every request. The Vault token expires periodically but can be renewed if policies are set to allow that.
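The login-then-renew flow can be sketched with curl and jq against Vault's HTTP API. This is a minimal sketch rather than the blueprint's own script: the function names are hypothetical, and we assume the caller has set `VAULT_ADDR` and `VAULT_CACERT`. AppRole logins also take a role ID alongside the secret ID; here we assume the role ID is already known to the container.

```shell
#!/bin/sh
# Sketch of an AppRole login and token renewal (function names are hypothetical).
# Assumes VAULT_ADDR (e.g. https://vault:8200) and VAULT_CACERT are set.

# approle_login_payload ROLE_ID SECRET_ID: prints the JSON body for the login call.
# IDs are assumed to be UUID-like strings that need no JSON escaping.
approle_login_payload() {
    printf '{"role_id":"%s","secret_id":"%s"}\n' "$1" "$2"
}

# approle_login ROLE_ID SECRET_ID: trades the secret ID for a Vault token
# and prints the token (needs curl, jq, and a reachable Vault).
approle_login() {
    approle_login_payload "$1" "$2" |
        curl -sS --fail --cacert "${VAULT_CACERT}" \
             -X POST -d @- \
             "${VAULT_ADDR}/v1/auth/approle/login" |
        jq -r .auth.client_token
}

# renew_token TOKEN: asks Vault to extend the token's lease and prints
# the new lease duration in seconds (only works if policy allows renewal).
renew_token() {
    curl -sS --fail --cacert "${VAULT_CACERT}" \
         -H "X-Vault-Token: $1" \
         -X POST "${VAULT_ADDR}/v1/auth/token/renew-self" |
        jq -r .auth.lease_duration
}
```

Keeping the payload builder separate from the network call makes the request easy to inspect without a running Vault.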

Bootstrapping Vault

Before we can use Vault to manage secrets we need to deploy it. Our blueprint for Vault includes orchestration scripts that can help you bootstrap the Vault cluster and manage secrets with it, and it provides a proposed process for handling the initial secrets suitable for organizations and teams that don't already have a secrets management system in place.

This design uses Vault in an HA configuration with Consul as the storage backend. We'll deploy three containers, each including both Consul and Vault (with Vault running as a co-process managed by ContainerPilot). The Consul containers will use Triton CNS to bootstrap their own clustering as described in the Autopilot Pattern Consul blueprint. Vault will use its own leader election with request forwarding enabled -- when a request for a secret arrives at a standby instance it will be proxied to the leader. See the Vault high availability documentation for more details.

    $ ./setup.sh up
    * Standing up the Vault cluster...
    docker-compose -f docker-compose.yml up -d
    Creating vault_vault_1
    docker-compose -f docker-compose.yml scale vault=3
    Creating and starting vault_vault_2 ... done
    Creating and starting vault_vault_3 ... done
    * Waiting for Consul to form raft.........
    $

Once the cluster is launched, we need to secure communications to the cluster and within the cluster. There are three streams of communication we want to encrypt: the Vault HTTP API, the Consul HTTP RPC, and the Consul gossip protocol. We'll need an SSL certificate for the HTTP APIs and a shared symmetric key for the Consul gossip encryption. Because we don't have an existing Vault in place, we can't use Vault to manage these secrets. Many organizations will want to issue certificates from their own certificate authority (CA) whereas other organizations might wish to use Let's Encrypt with the dns-01 challenge rather than the http-01 challenge (you probably don't want to expose Vault on the public internet!). The Vault blueprint provides tooling to create a local certificate authority on your workstation for demonstration purposes. Regardless of how we get the certificate and gossip key, we upload them to the Vault cluster and reload the Vault configuration.

    $ ./setup.sh secure \
        --tls-key secrets/vault.key.pem \
        --tls-cert secrets/vault.cert.pem \
        --ca-cert secrets/CA/ca_cert.pem --gossip secrets/gossip.key
    Securing vault_vault_1...
     copying certificates and keys
     updating Consul and Vault configuration for TLS
     updating trusted root certificate
    Securing vault_vault_2...
     copying certificates and keys
     updating Consul and Vault configuration for TLS
     updating trusted root certificate
    Securing vault_vault_3...
     copying certificates and keys
     updating Consul and Vault configuration for TLS
     updating trusted root certificate
    Restarting cluster with new configuration
    Restarting vault_vault_3 ... done
    Restarting vault_vault_2 ... done
    Restarting vault_vault_1 ... done

Note that at this step, if you check the logs on the Vault containers, you'll start seeing errors about "error checking seal status". Because the Vault isn't yet initialized we can't verify whether or not it's unsealed, and so the ContainerPilot health checks fail with this error as expected.
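To see why a health check can't report "unsealed" before initialization, it helps to look at the states Vault itself distinguishes. The sketch below maps the default status codes of Vault's `/v1/sys/health` endpoint to state names. It is not the health check the blueprint ships; the function names are hypothetical, `VAULT_ADDR` and `VAULT_CACERT` are assumed to be set by the caller, and newer Vault releases define additional codes that fall through to "unknown" here.

```shell
#!/bin/sh
# Sketch of a Vault health probe (function names are hypothetical).

# vault_state_from_http CODE: maps a /v1/sys/health status code to a state name
vault_state_from_http() {
    case "$1" in
        200) echo "active" ;;          # initialized, unsealed, and the leader
        429) echo "standby" ;;         # unsealed, but not the leader
        501) echo "uninitialized" ;;   # no master key has been created yet
        503) echo "sealed" ;;          # initialized, waiting for unseal keys
        *)   echo "unknown" ;;
    esac
}

# vault_health: queries Vault and prints its state
# (needs curl and a reachable Vault; assumes VAULT_ADDR and VAULT_CACERT are set)
vault_health() {
    code=$(curl -s -o /dev/null -w '%{http_code}' \
                --cacert "${VAULT_CACERT}" "${VAULT_ADDR}/v1/sys/health")
    vault_state_from_http "$code"
}
```

Right after the `secure` step we would expect a probe like this to report "uninitialized" on every node, which is consistent with the failing health checks described above.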

The next step is to initialize the Vault. This creates the master encryption key and splits it into multiple shards. Vault will encrypt each shard with one of our operators' PGP public keys, and then the operator who initialized the Vault will distribute those encrypted shard keys to the other operators for unsealing. In the example below, we're using three keys in total and accepting the default minimum of two keys to unseal. (If you want to try this out on your own non-production cluster you can just run ./setup.sh init and the script will generate and use a single key.)

    $ ./setup.sh init --keys my.asc,yours.asc,theirs.asc
    Uploading public keyfile my.asc to vault instance
    Uploading public keyfile yours.asc to vault instance
    Uploading public keyfile theirs.asc to vault instance
    Vault initialized.
    Created encrypted key file for my.asc: my.asc.key
    Created encrypted key file for yours.asc: yours.asc.key
    Created encrypted key file for theirs.asc: theirs.asc.key
    Initial Root Token: 39fc6a84-7d9d-d23c-d42f-c8ee8f9dc3e2
    Distribute encrypted key files to operators for unsealing.

In the ./secrets directory there will now be three .key files that contain the PGP-encrypted keys for each operator. Distribute these key files to the operators; because the keys are PGP encrypted this can even be done over email. At least two of them will need to take their key file and run the following:

    $ ./setup.sh unseal secrets/my.asc.key
    Decrypting key. You may be prompted for your key password...
    gpg: encrypted with 2048-bit RSA key, ID 01F5F344, created 2016-12-06
          "Example User "
    31dc41981e3207ed57ce4ccd83676ff3299cf00bd4c6c3eb7234c4c7ec54c471
    Use the unseal key above when prompted while we unseal each Vault node...
    Key (will be hidden):
    Sealed: true
    Key Shares: 3
    Key Threshold: 1
    Unseal Progress: 0
    ...

You'll be asked to provide the unseal key for each of the nodes. When we're done we should be able to run vault status on one of the nodes and see something like the following:

    $ docker exec -it vault_vault_1 vault status
    Sealed: false
    Key Shares: 3
    Key Threshold: 3
    Unseal Progress: 0
    Version: 0.6.4
    Cluster Name: vault-cluster-e9f3b9fc
    Cluster ID: 55cf8a98-1887-6bdc-c0b8-e9fefe2fe770

    High-Availability Enabled: true
        Mode: active
        Leader: https://172.20.0.3:8200

This is a good place to note that it's not quite turtles all the way down. When we unseal the Vault we're using the PGP keys that were generated by members of the team to encrypt the key shards. How your organization generates and handles those keys at the individual operator's workstation is a matter of internal procedure. All Vault operations are logged, so we always have an audit trail.

Using Vault in the Autopilot Pattern

To manage secrets in Vault with the Autopilot Pattern, we'll inject a one-time-use secret ID into the container's environment variables. Whatever you're using to launch the container (for example, your CI system or your container supervisor) will need a Vault role that allows it to issue new secret IDs. We don't need to worry about this secret ID being leaked or recorded in logs (a typical concern with environment variables) because it can only be used once.
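Here's a rough sketch of what the launcher's side of this could look like using Vault's AppRole HTTP API. The function names, the ten-minute secret ID lifetime, and the `VAULT_SECRET_ID` variable and `example/app` image in the usage comment are all hypothetical; we also assume `VAULT_ADDR`, `VAULT_CACERT`, and a `VAULT_TOKEN` with rights over the role are set by the caller.

```shell
#!/bin/sh
# Sketch of issuing one-time secret IDs (function names are hypothetical).

# role_payload POLICY: JSON for a role whose secret IDs can be used only once
# and expire after ten minutes if never used (the TTL is an assumption).
role_payload() {
    printf '{"policies":"%s","secret_id_num_uses":1,"secret_id_ttl":"10m"}\n' "$1"
}

# secret_id_path ROLE: API path that issues a new secret ID for ROLE
secret_id_path() {
    printf '/v1/auth/approle/role/%s/secret-id\n' "$1"
}

# create_role ROLE POLICY: creates or updates the AppRole (operator task)
create_role() {
    role_payload "$2" |
        curl -sS --fail --cacert "${VAULT_CACERT}" \
             -H "X-Vault-Token: ${VAULT_TOKEN}" \
             -X POST -d @- \
             "${VAULT_ADDR}/v1/auth/approle/role/$1"
}

# issue_secret_id ROLE: prints a fresh secret ID (launcher task; needs curl and jq)
issue_secret_id() {
    curl -sS --fail --cacert "${VAULT_CACERT}" \
         -H "X-Vault-Token: ${VAULT_TOKEN}" \
         -X POST "${VAULT_ADDR}$(secret_id_path "$1")" |
        jq -r .data.secret_id
}

# Hypothetical usage from a CI job or supervisor:
#   docker run -d -e VAULT_SECRET_ID="$(issue_secret_id jenkins)" example/app
```

Limiting the number of uses on the role is what makes a leaked environment variable harmless after the container's first login.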

Once the container has started, the ContainerPilot preStart will use the one-time-use secret ID from the environment variable to log in to Vault using the application's AppRole and receive a renewable Vault token it can use to fetch database credentials, certificates, or other secrets.

Note that if we're using our own CA to sign certificates for Vault, we'll need a mechanism to distribute the intermediate CA certificate to containers. We should do this in our CI system when our containers are built.

Using TLS between containers in the Autopilot Pattern has an extra complication. Because we're not using DNS for service discovery but instead are using the IP addresses we get from the discovery backend (e.g., Consul), each container needs its own TLS certificate. In that scenario, we can have each container use Vault's PKI secrets backend to request certificates for itself during the ContainerPilot preStart, and this will let us encrypt all internal traffic. We can't do this for Vault itself of course, so we should use Triton CNS to give Vault a DNS name and issue a certificate for that. This is similar to how we're using CNS to give a DNS name for Consul in the various Autopilot Pattern examples.
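A container's preStart could request its own certificate along these lines. This is a sketch under several assumptions: the PKI backend is mounted at `pki`, a PKI role exists that permits the requested common name and IP SANs, and `VAULT_ADDR`, `VAULT_CACERT`, and `VAULT_TOKEN` are already set (the token being the one obtained from the AppRole login). The function names and output file names are hypothetical.

```shell
#!/bin/sh
# Sketch of requesting a per-container certificate from Vault's PKI backend.

# pki_issue_payload COMMON_NAME IP TTL: JSON body for the issue call.
# The IP goes into ip_sans so peers that connect by IP address can verify us.
pki_issue_payload() {
    printf '{"common_name":"%s","ip_sans":"%s","ttl":"%s"}\n' "$1" "$2" "$3"
}

# request_certificate PKI_ROLE COMMON_NAME IP OUTDIR: writes cert.pem, key.pem,
# and ca.pem into OUTDIR (needs curl, jq, and a reachable Vault).
request_certificate() {
    resp=$(pki_issue_payload "$2" "$3" "72h" |
        curl -sS --fail --cacert "${VAULT_CACERT}" \
             -H "X-Vault-Token: ${VAULT_TOKEN}" \
             -X POST -d @- \
             "${VAULT_ADDR}/v1/pki/issue/$1") || return 1
    # printf rather than echo, so backslash escapes in the JSON survive intact
    printf '%s\n' "$resp" | jq -r .data.certificate > "$4/cert.pem"
    printf '%s\n' "$resp" | jq -r .data.private_key > "$4/key.pem"
    printf '%s\n' "$resp" | jq -r .data.issuing_ca  > "$4/ca.pem"
    chmod 400 "$4/key.pem"
}
```

A short TTL (72 hours here, an arbitrary choice) keeps the exposure small if a container's key is ever leaked, at the cost of needing to re-request certificates more often.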

Jenkins and Vault

Let's walk through an example of how we might use our Vault deployment to manage secrets for our continuous integration (CI) process. CI servers like Jenkins hold the keys to the kingdom in modern software organizations; an attacker with access to the CI server could use the CI server to deploy their own code using our testing infrastructure or even subtly alter our application builds. If we want to use Jenkins for CI and testing of our Autopilot Pattern applications on Triton, Jenkins needs to have access to SSH keys with the rights to launch containers, as well as GitHub shared secrets for web hooks and GitHub deploy keys for private repositories. You can follow along the example below with our Autopilot Pattern Jenkins blueprint.

In this example, we'll deploy Jenkins with an Nginx reverse proxy. Jenkins will use ContainerPilot preStart to fetch its initial configuration from GitHub, and Nginx will use ContainerPilot and Let's Encrypt to give itself an SSL certificate so we have encrypted client communications to Jenkins.

The Jenkins container will use the one-time secret ID we pass into its environment to log in to Vault in the first-run.sh script that is executed by the ContainerPilot preStart. It uses this secret ID to log in and fetch a renewable token, then uses that token to get all the secrets it needs and puts them in the expected places on disk. Here's an excerpt from the first-run.sh script showing how we set up Triton credentials:

    echo 'setting up Triton credentials for launching Docker containers'
    curl -sL \
         --cacert /usr/local/share/ca-certificates/ca_cert.pem \
         -H "X-Vault-Token: ${jenkinsToken}" \
         "https://vault:8200/v1/secret/jenkins/triton_cert" | \
        jq -r .data.value | tr "\\n" "\n" > ${JENKINS_HOME}/.ssh/triton
    chmod 400 ${JENKINS_HOME}/.ssh/triton
    ssh-keygen -y -f ${JENKINS_HOME}/.ssh/triton -N '' \
               > ${JENKINS_HOME}/.ssh/triton.pub

    echo 'running Triton setup script using your credentials...'
    bash /usr/local/bin/sdc-docker-setup.sh ${SDC_URL} ${SDC_ACCOUNT} ~/.ssh/triton

In an ideal world our secrets would only ever exist in memory, but supporting arbitrary real-world applications often involves these sorts of compromises. We can limit exposure by ensuring that we never commit the Jenkins container to a registry once it contains secrets, and by rotating keys on a regular basis.

Once Jenkins has started, the Jenkins job named jenkins-jobs will fetch the jobs definitions from the repo given in the GITHUB_JOBS_REPO environment variable and use the reload-jobs.sh script that's included in our container image. For each job configuration it finds in the repository, this task will copy the job config to where Jenkins can find it and make a Jenkins API call to make this a new job as shown below:

    for job in ${WORKSPACE}/jenkins/jobs/*; do
        local jobname=$(basename ${job})
        local config=${WORKSPACE}/jenkins/jobs/${jobname}/config.xml

        # create a new job for each directory under workspace/jobs
        curl -XPOST -s -o /dev/null \
             -d @${config} \
             --netrc-file /var/jenkins_home/.netrc \
             -H 'Content-Type: application/xml' \
             http://localhost:8000/createItem?name=${jobname}

        # update jobs; this re-updates a brand new job but saves us the
        # trouble of parsing Jenkins output and is idempotent for a new
        # job anyways
        curl -XPOST -s --fail -o /dev/null \
             -d @${config} \
             --netrc-file /var/jenkins_home/.netrc \
             -H 'Content-Type: application/xml' \
             http://localhost:8000/job/${jobname}/config.xml
    done

Try it yourself!

The Jenkins example we've shown here highlights how ContainerPilot and the Autopilot Pattern can be used to reduce the complexity of managing secrets with Vault. The mechanisms that application developers have to understand are relatively simple -- the application makes an API call with the one-time secret ID and can then get whatever secrets it needs. Applications can operate without human intervention while at the same time access to secrets can be controlled, revoked, and audited. Try out our Vault and Jenkins blueprints on your own, and show us how you're using Vault in your own Autopilot Pattern applications!

Post written by Tim Gross