Introduction to ContainerPilot for building applications

A lot has been written about the benefits of the Autopilot Pattern and ContainerPilot, including most recently how to use Node.js with it. There’s something to be said about enabling developers to focus on building better applications instead of worrying about scaling, startup, shutdown, and recovery from anticipated failures. The Autopilot Pattern approach to orchestration works with the scheduler of your choice, from complex solutions like Kubernetes or Mesos to solutions as simple and developer-friendly as Docker Compose to automate the configuration of applications in separate containers. It handles stateless containers as well as those with persistent data, and it’s portable to any container infrastructure, from your local machine to large-scale clouds, and everywhere in between.

To better grasp the ease of using ContainerPilot to build Autopilot Pattern apps, we’ve designed a Hello World application using two small Node.js services with Nginx powering the front-end.

tl;dr: check out our Hello World application on GitHub

Diving deeper into ContainerPilot

ContainerPilot uses a JSON configuration file inside of your containers to automate discovery and configuration of an application according to the Autopilot Pattern. This file specifies what service(s) the container provides, health checks for those services, as well as the service backends: the services provided by other containers that this container depends on.

There are four main sections to the config file:

- consul: information about how to connect to Consul, which will provide consistent feedback about the inner workings of your infrastructure via your services’ TTL heartbeats
- preStart: an event defining a command to run before starting the main application; additional user-defined events include preStop, postStop, health, onChange, and other periodic tasks
- services: what this container provides to other containers in the application
- backends: the other services that this container depends on, with configuration specifying how to monitor them and what to do in the application if the services change

Each container has its own configuration file. The configuration is simple, and most of it specifies what service(s) the container provides (the services) and what other service(s) it depends on (the backends). See the configuration for Nginx for a complete example. If your container has no backends, it can be even simpler: neither Hello nor World has any dependencies, so their configuration files contain only the Consul address and a services section.
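To make the relationship between the sections concrete, here is a minimal Node.js sketch that takes a parsed configuration and reports what a container provides and what it depends on. The summarize helper is hypothetical and not part of ContainerPilot; the two sample objects are trimmed from the Hello and Nginx configurations shown later in this post.

```javascript
// Hypothetical helper (not part of ContainerPilot): summarize which services
// a container provides and which backends it depends on.
function summarize(config) {
  const names = (section) => (config[section] || []).map((entry) => entry.name);
  return { provides: names('services'), dependsOn: names('backends') };
}

// Trimmed from the Hello configuration: services only, no backends.
const helloConfig = {
  consul: 'consul:8500',
  services: [{ name: 'hello', port: 3001 }],
};

// Trimmed from the Nginx configuration: one service, two backends.
const nginxConfig = {
  consul: 'consul:8500',
  services: [{ name: 'nginx', port: 80 }],
  backends: [{ name: 'hello' }, { name: 'world' }],
};

console.log(summarize(helloConfig));
console.log(summarize(nginxConfig));
```

A container without a backends section simply reports an empty dependency list, which is the case for Hello and World.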

When services change, it’s important that anything that depends on them is notified and changes accordingly. This is what the onChange event of a backend is for. For this project, we’ll be reloading Nginx when Hello or World changes. More about that later.

Container lifecycle

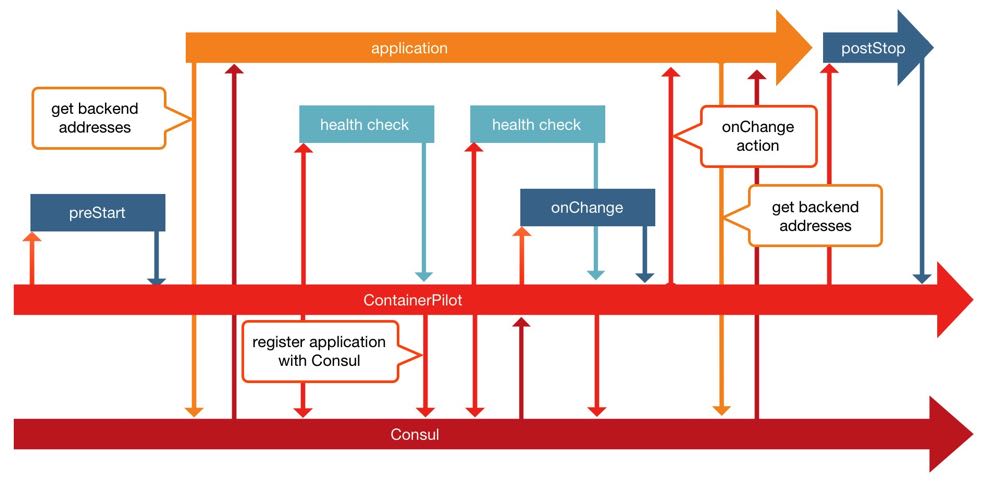

ContainerPilot adds awareness of container lifecycle to your application. What needs to happen inside the container as the service starts or as you shut it down? Do you need to do things periodically while the service is running? All of this and more is done with preStart, postStop, health, onChange, and other events declared in the configuration file. ContainerPilot runs all these tasks automatically, so you don't have to.

The above diagram of the container lifecycle explains when ContainerPilot triggers different events and calls the executables you specify in the containerpilot.json configuration file.

Building our application

Understanding the file structure

At the top level, there’s a docker-compose.yml file which, as already mentioned, describes how to put the containers together. Beyond that are the directories for each of the services that make up the application.

There could be as many services, and for that matter service directories, as you wish. Each service may or may not interact with the other services, depending on their role. There may be a database which has no dependencies by itself, but a webserver which relies on that database to provide content. For Hello World, we’ve used Nginx to serve static files and two separate Node.js services to provide content. Our file structure looks like this:

- README.md
- docker-compose.yml
- nginx
  - containerpilot.json
  - Dockerfile
  - nginx.conf
  - nginx.conf.ctmpl
  - reload-nginx.sh
  - index.html
  - style.css
- hello
  - containerpilot.json
  - Dockerfile
  - package.json
  - index.js
- world
  - containerpilot.json
  - Dockerfile
  - package.json
  - index.js

Each container needs a containerpilot.json and a Dockerfile. The Dockerfile describes how to construct each image.

Building Hello & World

The key components of the Hello World project are two services: hello and world. These services are built using Node.js without any external dependencies. Both service directories have the following key files:

- containerpilot.json
- Dockerfile: describes the image including a [minimal Node.js image](https://hub.docker.com/r/mhart/alpine-node/), ContainerPilot, and the Node.js service files
- package.json: the details about your service including a name, the version of npm, author, dependencies, etc.
- index.js: the Node.js service

For our Hello service, the containerpilot.json file looks like this:

    {
      "consul": "consul:8500",
      "services": [
        {
          "name": "hello",
          "port": 3001,
          "health": "/usr/bin/curl -o /dev/null --fail -s http://localhost:3001/",
          "poll": 3,
          "ttl": 10
        }
      ]
    }

It’s short and simple. The whole point of the file is to configure Hello to be ready for inclusion within the greater application. This includes adding Consul access as well as declaring the container with:

- name: name of the service
- port: port for the service
- health: how to check if the service is running
- poll: seconds between health checks (and how frequently we "heartbeat")
- ttl: the time to live after the last successful health check (or "heartbeat")
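The interplay of poll and ttl can be sketched as a small timeline. This is a simplified model of a TTL check, not Consul's actual implementation. The function names are made up for illustration, and the model assumes that every passing health check resets the TTL while a failing check sends no heartbeat.

```javascript
// Simplified model (an assumption, not Consul's implementation): the service
// counts as healthy while the time since the last heartbeat is below the TTL.
function isHealthy(lastHeartbeat, now, ttl) {
  return now - lastHeartbeat < ttl;
}

// Count how many consecutive health checks can fail after a heartbeat at t = 0
// before the TTL runs out. Checks run at t = poll, 2 * poll, and so on.
function failedChecksBeforeExpiry(poll, ttl) {
  if (poll <= 0) {
    throw new RangeError('poll must be greater than zero');
  }
  let failed = 0;
  for (let t = poll; isHealthy(0, t, ttl); t += poll) {
    failed += 1;
  }
  return failed;
}

// Values from the Hello configuration: poll every 3 seconds, TTL of 10 seconds.
console.log(failedChecksBeforeExpiry(3, 10));
```

Under this model, a ttl that is a few multiples of poll leaves room for an occasional slow or failed check without the service immediately dropping out of the catalog.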

The only difference between Hello and World’s configuration is the name and the port number (World’s port is 3002).
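For orientation, a dependency-free service of this kind could look roughly like the sketch below. This is not the code from the repository: the response body and the start helper are assumptions. All the service needs to do for the health check is answer an HTTP request on its port with a success status, so that curl --fail exits cleanly.

```javascript
const http = require('http');

// Assumed response body; the real service may return something different.
const GREETING = 'Hello';

// Answer every request with 200 so that `curl --fail` succeeds.
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/plain' });
  res.end(GREETING);
}

// Hypothetical helper: start the service on the given port.
function start(port) {
  const server = http.createServer(handleRequest);
  server.listen(port, () => console.log(`listening on ${port}`));
  return server;
}
```

Calling start(3001) at the end of the file would match the Hello configuration above; World would call start(3002).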

Building the front-end

We’ve gone with Nginx to power the front-end of our application, which includes a ContainerPilot configuration file, a Dockerfile, configuration of Nginx, as well as the index.html file that will be the face of the application. The containerpilot.json file has much more going on than Hello and World did:

    {
      "consul": "consul:8500",
      "preStart": "/bin/reload-nginx.sh preStart",
      "logging": {
        "level": "DEBUG",
        "format": "text"
      },
      "services": [
        {
          "name": "nginx",
          "port": 80,
          "interfaces": ["eth1", "eth0"],
          "health": "/usr/bin/curl -o /dev/null --fail -s http://localhost/health",
          "poll": 10,
          "ttl": 25
        }
      ],
      "backends": [
        {
          "name": "hello",
          "poll": 3,
          "onChange": "/bin/reload-nginx.sh onChange"
        },
        {
          "name": "world",
          "poll": 3,
          "onChange": "/bin/reload-nginx.sh onChange"
        }
      ]
    }

Like Hello and World, Nginx connects to Consul on port 8500. Before Nginx starts, a preStart action is invoked to generate the Nginx configuration file. The action is almost the same thing we do in the onChange event when the backends change.

The configuration file lists both the Hello and World services as backends that Nginx depends on. The onChange setting on each backend declares that when that backend changes, Nginx should update and reload its configuration.
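What the reload step has to achieve can be sketched as follows. In the project this work is done by reload-nginx.sh together with the nginx.conf.ctmpl template; the JavaScript below is only an illustration, with made-up names and addresses, of how a list of healthy backend instances could become an Nginx upstream block.

```javascript
// Illustration only: render an Nginx upstream block from healthy instances.
// `instances` is an array of { address, port } objects (hypothetical shape).
function renderUpstream(name, instances) {
  if (instances.length === 0) {
    // With no healthy instance there is nothing to proxy to.
    return '';
  }
  const servers = instances.map((i) => `  server ${i.address}:${i.port};`);
  return [`upstream ${name} {`, ...servers, '}'].join('\n');
}

// Made-up addresses for two Hello instances.
console.log(renderUpstream('hello', [
  { address: '172.17.0.4', port: 3001 },
  { address: '172.17.0.5', port: 3001 },
]));
```

Regenerating this block whenever a backend changes, and then reloading Nginx, is what keeps the front-end pointed at instances that are actually healthy.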

One Compose to rule them all

At the core of this application is the Docker Compose file, docker-compose.yml, which defines the containers, networks and volumes. It’s what will get our application up and running on our local machine and on Triton:

    nginx:
      build: nginx/
      ports:
        - "80:80"
      links:
        - consul:consul
      restart: always
    hello:
      build: hello/
      links:
        - consul:consul
    world:
      build: world/
      links:
        - consul:consul
    consul:
      image: consul:latest
      restart: always
      ports:
        - "8500:8500"

The file tells Compose to build the Nginx image from the nginx directory and to map the container's port 80 to the host machine's port 80. Setting links adds an entry for consul to the container's hosts file. This example uses the official Consul image; however, when you move to production, the recommendation is to use multiple Consul instances. There is an Autopilot Pattern Consul image, which helps when building highly available applications that require multiple Consul instances.

Both Hello and World are built and linked to Consul as well.[1] Finally, Consul has its port 8500 mapped to the Docker host's port 8500, so it's accessible for more information about the health of the application.

Run it!

The Docker Compose tool is all it takes to build and run the application. Open your terminal and run docker-compose up -d to start Hello World.

    Starting helloworld_consul_1
    Starting helloworld_hello_1
    Starting helloworld_world_1
    Starting helloworld_frontend_1
    Attaching to helloworld_consul_1, helloworld_hello_1, helloworld_world_1, helloworld_frontend_1
    [...]
    consul_1 | ==> Log data will now stream in as it occurs:
    [...]
    frontend_1 | time="2016-09-02T20:45:53Z" level=debug msg="preStart.RunAndWait start"
    frontend_1 | time="2016-09-02T20:45:53Z" level=debug msg=preStart.Cmd.Run
    [...]
    frontend_1 | time="2016-09-02T20:46:13Z" level=debug msg="nginx.health.run complete"

To see and follow the logged output, run docker-compose logs -f.[2] There will be continuous feedback in the terminal until you stop the application or stop following the output.

The Consul dashboard reports the services that are part of the catalog and their health. Below is a screenshot of the Consul dashboard showing the services and their health checks.
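The same information is available over Consul's HTTP API. The sketch below assumes Node.js 18 or later (for the built-in fetch) and a Consul agent reachable at localhost:8500, as mapped in the Compose file. The helper names are hypothetical.

```javascript
// Build the URL for Consul's health endpoint, limited to passing instances.
function healthUrl(consulAddr, service) {
  return `http://${consulAddr}/v1/health/service/${encodeURIComponent(service)}?passing`;
}

// Reduce the response entries to address/port pairs. When a service did not
// register its own address, fall back to the address of its node.
function toInstances(entries) {
  return entries.map((entry) => ({
    address: entry.Service.Address || entry.Node.Address,
    port: entry.Service.Port,
  }));
}

// Query Consul for healthy instances of a service (requires a running Consul).
async function healthyInstances(consulAddr, service) {
  const response = await fetch(healthUrl(consulAddr, service));
  if (!response.ok) {
    throw new Error(`Consul returned HTTP ${response.status}`);
  }
  return toInstances(await response.json());
}
```

With the application running, healthyInstances('localhost:8500', 'hello').then(console.log) should list the Hello instances whose health checks are currently passing.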

Start building self-reliant applications

The Autopilot Pattern automates the tedious operational tasks, including startup, shutdown, scaling, and recovery from failure, to make applications more reliable, productive, and easy to use. When applications get configured statically, it's harder to add new instances or work around failures. Dynamic configuration helps applications to scale and deal with failures. Adding self-assembly and self-management features to your applications with ContainerPilot saves time and energy that's better used elsewhere, and saves us from having to rewrite our applications to be as reliable and scalable as we need.

ContainerPilot is designed to integrate the Autopilot Pattern into your container-based application, whether it is a legacy or a greenfield project. With ContainerPilot, your services can remain in separate containers with automatic configuration and discovery.

Perhaps most appealing, ContainerPilot is portable across any container-based infrastructure; it will run the same on your laptop as it does on Triton’s bare metal.

You can check out our Hello World application on GitHub, dig deeper into the source code and start your project. Make your life easier for testing and deploying your application by building with ContainerPilot.

[1] In a real world use of ContainerPilot, you'll want to avoid links and use a CNS instead. links are great for a demo, but not so great for a highly available application.

[2] When you run docker-compose logs -f on Triton you'll see an error message about Docker events. You can safely ignore that message.

Post written by Alexandra White and Wyatt Lyon Preul